–image generated using chatGPT–

Before attending class, I asked ChatGPT to “write me a critical blog post on the use of AI in education.” This is what it spit out:

The Double-Edged Sword: A Critical Look at AI in Education

Artificial intelligence (AI) is often hailed as the next frontier in education, promising personalized learning, enhanced efficiency, and broader access. While these possibilities are exciting, a critical examination reveals significant challenges and potential pitfalls that should not be overlooked. From ethical dilemmas to the risk of exacerbating inequities, the use of AI in education is far from a one-size-fits-all solution.

Loss of the Human Touch

One of the most pressing concerns about AI in education is the potential loss of the human element in teaching. Education is not just about imparting knowledge; it’s about fostering relationships, understanding emotional cues, and inspiring learners. Teachers bring empathy, creativity, and adaptability to their classrooms—qualities that AI cannot replicate. Relying heavily on AI tools risks reducing education to a transactional exchange of information, undermining the rich, interpersonal experiences that define meaningful learning.

Privacy and Ethical Concerns

The use of AI in education often involves collecting vast amounts of data on students, including their learning patterns, behaviors, and even personal information. This raises significant privacy concerns. Who owns this data, and how is it protected? There are also ethical questions about how AI algorithms make decisions. Bias in AI systems can reinforce existing stereotypes or disadvantage certain groups of students, particularly if the data used to train these systems lacks diversity. Without stringent oversight, AI could inadvertently perpetuate inequality rather than mitigate it.

The Digital Divide

While AI has the potential to democratize education, it can also deepen the digital divide. Implementing AI-powered tools requires reliable internet access, modern devices, and technological literacy—resources that are not equally distributed across schools or communities. Students from low-income families or underfunded schools may find themselves further marginalized as education increasingly relies on technology. In this context, AI could widen the gap between the haves and the have-nots instead of closing it.

Overemphasis on Efficiency

AI is often celebrated for its ability to streamline administrative tasks, such as grading or lesson planning. While this can free up time for teachers, it also raises the question of what is lost when education prioritizes efficiency over depth. Automated grading, for instance, may struggle to assess complex assignments that require subjective judgment, such as essays or creative projects. This focus on quantifiable metrics risks reducing learning to what can be easily measured, neglecting critical thinking, creativity, and emotional intelligence.

Dependency and De-skilling

As educators and institutions increasingly rely on AI, there is a risk of dependency that could erode essential skills among teachers and learners. Teachers may lose the ability to create lesson plans or assess students without AI assistance, while students might rely too heavily on AI tools for answers, undermining their problem-solving skills. Over time, this could lead to a de-skilling of both groups, weakening the foundational competencies that education is supposed to cultivate.

Conclusion: Proceed with Caution

AI in education is undoubtedly a powerful tool with the potential to transform learning. However, its adoption must be approached with caution, critical reflection, and a commitment to equity and ethics. Policymakers, educators, and technologists must work collaboratively to ensure that AI enhances, rather than detracts from, the educational experience. By addressing these challenges head-on, we can harness AI’s potential without compromising the core values that make education a deeply human endeavor.

–the above was generated using ChatGPT–

It was very interesting to see the AI essentially criticize itself, presumably drawing on other blog posts and articles about AI in education. I think it took such a critical approach because of the word “critical” in the prompt, but I honestly expected it to paint itself in a better light. I do, however, agree with many of its points as of right now. The rest of this post will be completed after the lecture, and I will determine whether I still agree with the AI’s critical analysis.

I find it interesting that ChatGPT did not identify students using AI to cheat on assignments and essays as a potential issue with AI in education, beyond just “dependency.”

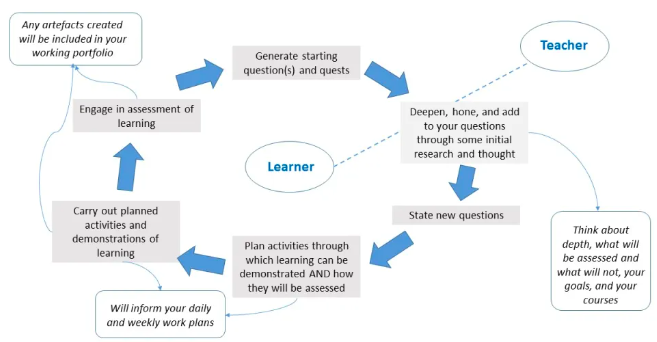

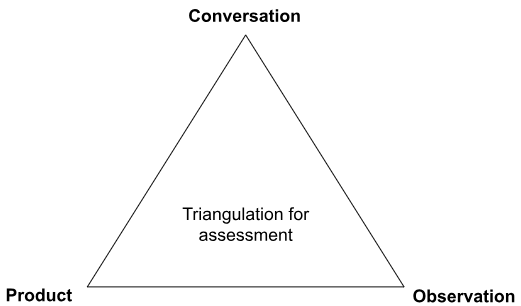

I like the idea of focusing on the learning journey rather than the product to reduce the likelihood of cheating. By emphasizing the journey a student took to get to a product, you can more closely monitor their process and make sure that they are not relying on AI to complete their work.

I had never thought about how submitting students’ work to an AI to check for the use of AI just feeds the AI more data, this time generated by my students. This means that my students’ work is now being used by a company, and the AI is now “learning” from its own work, which I think would lead to pretty weird results.

I also noticed that the AI blog post did not touch on how unreliable AI can be with math, or on the computational errors that AI often makes. That is a huge concern I have when using AI, even in my own work for small projects, so I hope that students are aware of these potential errors. It will be something that I address when I talk about the use of AI with my students (assuming that AI hasn’t improved a thousand times by then).

Finally, the AI did not acknowledge the massive environmental impact of generative AI. This is something that I care very much about, and it drives me to use AI minimally in my life, so I hope that this is a value that I can impart to my students.

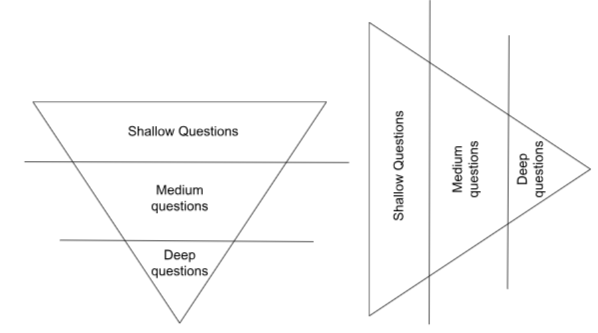

Overall, my approach to AI is going to involve reducing the number of opportunities that students have to use it, while also educating them on the proper way to supplement their work with AI and on the various problems with it. I will assign many on-paper assignments to limit opportunities to complete work online using AI. When I do give online assignments, I will require students to show their entire thought process, either by submitting many drafts or by explaining to me the process that led to every decision in their work. I will unfortunately always be critical of student work that seems “too good,” but hopefully once I actually see some assignments done with AI, I will get better at recognizing it and better at motivating my students to avoid it.